by Ashis Pati

Over the course of the evolution of rock, electric guitar solos have developed into an important feature of any rock song. Their popularity among rock music fans is reflected by lists found online such as here and here. The ability to automatically detect guitar solos could, for example, be used by music browsing and streaming services (like Apple Music and Spotify) to create targeted previews of rock songs. Such an algorithm would also be useful as a pre-processing step for other tasks such as guitar playing style analysis.

What is a Solo?

Even though most listeners can easily identify the location of a guitar solo within a song, it is not a trivial problem for a machine. Looking at it from an audio signal perspective, solos can be very similar to some of the other techniques such as riffs or licks.

Therefore, we define a guitar solo as having the following characteristics:

- The guitar is in the foreground compared to other instruments

- The guitar plays improvised melodic phrases which don’t repeat over measures (differentiate from a riff)

- The section is larger than a few measures (differentiate from a lick)

What about Data?

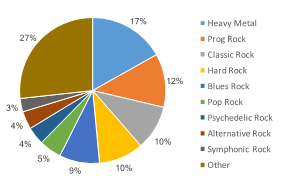

In the absence of any annotated dataset of guitar solos, we decided to create a pilot dataset containing 60 full-length rock songs and annotated the location of the guitar solos within the song. Some of the songs contained in the dataset include classics like “Stairway to Heaven,” “Alive,” and “Hotel California.” The sub-genre distribution of the dataset is shown in Fig. 1.

What Descriptors can be used to discriminate solos?

The widespread use of effect pedal boards and amps results in a plethora of different electric guitar “sounds,” possibly almost as large as the number of solos themselves. Hence, finding audio descriptors capable of discriminating a solo from a non-solo part is NOT a trivial task. To gauge how difficult this actually is, we implemented a Support Vector Machine (SVM) based supervised classification system (see the overall block diagram in Fig. 2).

The widespread use of effect pedal boards and amps results in a plethora of different electric guitar “sounds,” possibly almost as large as the number of solos themselves. Hence, finding audio descriptors capable of discriminating a solo from a non-solo part is NOT a trivial task. To gauge how difficult this actually is, we implemented a Support Vector Machine (SVM) based supervised classification system (see the overall block diagram in Fig. 2).

In addition to the more ubiquitous spectral and temporal audio descriptors (such as Spectral Centroid, Spectral Flux, Mel-Frequency Cepstral Coefficients etc.), we examine two specific class of descriptors which intuitively should have better capacity to differentiate solo segments from non-solo segments.

- Descriptors from Fundamental Pitch estimation:

A guitar solo is primarily a melodic improvisation and hence, can be expected to have a distinctive fundamental frequency component which would be different from that of another instrument (say a bass guitar). In addition, during a solo the guitar will have a stronger presence in the audio mix which can be measured using the strength of the fundamental frequency component. - Descriptors from Structural Segmentation:

A guitar solo generally doesn’t repeat in a song and hence, would not occur in repeated segments of a song (e.g., chorus, song). This allows to leverage existing structural segmentation algorithms in a novel way. A measure of the number of times a segment has been repeated in a song and the normalized length of the segment can serve as useful inputs to the classifier.

By using these features and post-processing to group the identified solo segments together, we obtain a detection accuracy of nearly 78%.

The main purpose of this study was to provide a framework against which more sophisticated solo detection algorithms can be examined. We use relatively simple features to perform a rather complicated task. The performance of features based on structural segmentation is encouraging and warrants further research into developing better features. For interested readers, the full paper as presented at the 2017 AES Conference on Semantic Audio can be found here.